This article describes how to add an existing cluster to ClusterControl.

Supported clusters are:

- Galera Clusters

- Collection of MySQL Servers

- MongoDB/TokuMX

Assumptions:

- Use a dedicated server for the CMON Controller and UI. Do not colocate it with the cluster.

- Use the same OS for the Controller/UI server as for the cluster. Do not mix them; mixed setups are untested.

- We assume in this guide that you install as root. Read more on server requirements.

Outline of steps:

- Setup SSH to the cluster nodes

- Preparations of Monitored MySQL Servers (part of the cluster)

- Installation

- Add the existing cluster

Limitations:

- All nodes must be started and reachable. For Galera, all nodes must be SYNCED when the existing cluster is added.

- If the controller is deployed on an IP address (hostname=<ipaddr> in /etc/cmon.cnf) and the MySQL/Galera server nodes run without skip_name_resolve, then GRANTs may fail. http://54.248.83.112/bugzilla/show_bug.cgi?id=141

- GALERA: If wsrep_cluster_address contains a garbd, then the installation may fail.

- GALERA: Auto-detection of the Galera nodes is based on wsrep_cluster_address. At least one Galera node must have wsrep_cluster_address=S1,..,Sn set, where S1 to Sn denote the Galera nodes that are part of the Galera cluster.
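The SYNCED requirement in the list above can be checked by hand before adding the cluster. The following is a minimal sketch, not part of ClusterControl: the helper name `is_synced` is hypothetical, and it assumes the node reports its state through the Galera status variable `wsrep_local_state_comment` and that the `mysql` client is available on the node.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with ClusterControl).
# Reads tab-separated "name<TAB>value" status output on stdin and succeeds
# only if wsrep_local_state_comment is exactly "Synced".
is_synced() {
    awk '$1 == "wsrep_local_state_comment" { state = $2 }
         END { if (state == "Synced") exit 0; exit 1 }'
}

# Usage on each Galera node (adjust the port to the one you use):
#   mysql -uroot -p -h127.0.0.1 -P3306 -N -B \
#     -e "SHOW GLOBAL STATUS LIKE 'wsrep_local_state_comment'" | is_synced \
#     && echo "node is synced" || echo "node is NOT synced"
```

The function only inspects text, so it can be tried out with `printf` before pointing it at a real server.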

Setup SSH from Controller -> Cluster, and Controller -> Controller

On the controller do, as user 'root':

ssh-keygen -t rsa

(press enter on all questions)

For each cluster node do:

ssh-copy-id root@<cluster_node_ip>

On the controller do (controller needs to be able to ssh to itself):

ssh-copy-id root@<controller_ip>
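Once the keys are copied, it can be worth confirming that the controller really reaches every host without a password prompt. The sketch below is one possible check, not an official tool: the function name `check_ssh_nodes` and the `SSH_BIN` override are assumptions made for this example, and the host arguments are placeholders.

```shell
#!/bin/sh
# Sketch: verify key-based SSH as root from the controller to each host.
# SSH_BIN is a hypothetical override used for dry runs; it defaults to ssh.
SSH_BIN="${SSH_BIN:-ssh}"

check_ssh_nodes() {
    failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of asking for a password
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 \
               "root@${host}" true </dev/null >/dev/null 2>&1; then
            echo "OK   ${host}"
        else
            echo "FAIL ${host}"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (placeholders, include the controller itself):
#   check_ssh_nodes <controller_ip> <cluster_node_ip> <cluster_node_ip>
```

A FAIL line for the controller's own address usually means the second ssh-copy-id step was skipped.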

SSH as a Non-root user

For now, you need to set up passwordless sudo.

See the server requirements for details.

Monitored MySQL Servers - GRANTs

This section applies to "Galera clusters" and "Collection of MySQL Servers".

The MySQL 'root' user on the monitored MySQL servers must have its privileges granted WITH GRANT OPTION.

On the monitored MySQL servers, you must be able to connect like this:

mysql -uroot -p<root password> -h127.0.0.1 -P3306 #change port from 3306 to the MySQL port you use.

mysql -uroot -p<root password> -P3306 #change port from 3306 to the MySQL port you use.

and NOT LIKE THIS:

mysql -uroot <--- INVALID EXAMPLE

If you cannot connect to the MySQL server(s) as in the first example above, run:

mysql> GRANT ALL ON *.* TO 'root'@'127.0.0.1' IDENTIFIED BY '<root password>' WITH GRANT OPTION;

mysql> GRANT ALL ON *.* TO 'root'@'localhost' IDENTIFIED BY '<root password>' WITH GRANT OPTION;
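To confirm that the grants took effect, the output of SHOW GRANTS can be inspected. The following is a small sketch under stated assumptions: the helper name `has_grant_option` is hypothetical, and it only looks for a global (`ON *.*`) grant line that ends in WITH GRANT OPTION, which is how the servers in this article print it.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if stdin contains a global grant
# (ON *.*) that carries WITH GRANT OPTION.
has_grant_option() {
    grep -q 'ON \*\.\* TO .*WITH GRANT OPTION'
}

# Usage on a monitored MySQL server (adjust the port to the one you use):
#   mysql -uroot -p -h127.0.0.1 -P3306 -N -B \
#     -e "SHOW GRANTS FOR CURRENT_USER()" | has_grant_option \
#     && echo "root has WITH GRANT OPTION" || echo "missing WITH GRANT OPTION"
```

Connecting with -h127.0.0.1 checks the 'root'@'127.0.0.1' account; run it again without -h to check 'root'@'localhost'.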

Installation

Follow the instructions below. It will install a MySQL Server as well (if none is installed already) to host the monitoring and management data, the UI, and the CMON Controller with a minimal configuration.

$ wget http://severalnines.com/downloads/cmon/install-cc.sh

$ chmod +x install-cc.sh

$ sudo ./install-cc.sh

A longer version of the instructions is located here: http://support.severalnines.com/entries/23737976-ClusterControl-Installation

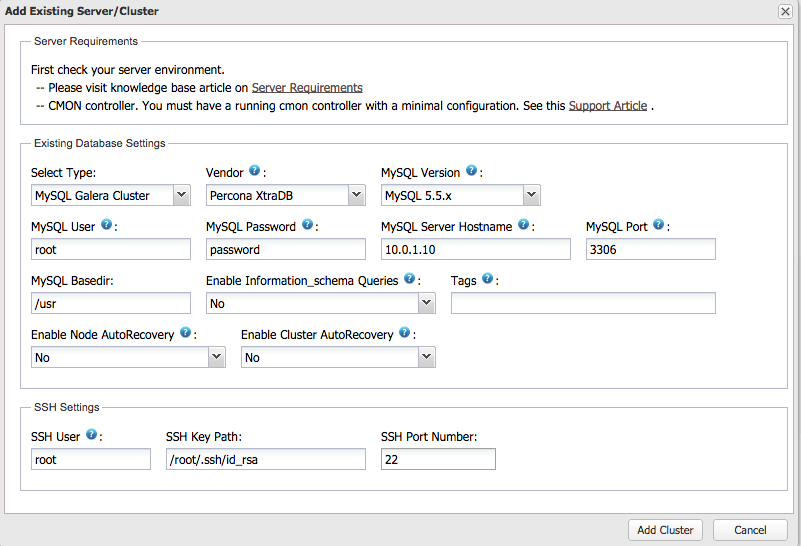

Add Existing Cluster

In the UI, press the button Add Existing Cluster.

- All cluster nodes must have the same OS

- All cluster nodes must be installed in the same place

- All cluster nodes must listen on the same mysql port

In the screenshot below you see an example of adding a Galera Cluster with one node running on 10.0.1.10 (the rest of the Galera nodes are auto-detected).

The Galera node on 10.0.1.10 must have wsrep_cluster_address set. To verify this, open a terminal on 10.0.1.10 and run:

mysql -uroot -h127.0.0.1 -p

mysql> show global variables like 'wsrep_cluster_address';

+-----------------------+------------------------------------------------------------+

| Variable_name         | Value                                                      |

+-----------------------+------------------------------------------------------------+

| wsrep_cluster_address | gcomm://10.0.1.10,10.0.1.11,10.0.1.12                      |

+-----------------------+------------------------------------------------------------+

1 row in set (0.01 sec)
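To see which hosts such a value names, it can be split into one address per line. This is an illustrative sketch only and not how cmon parses the value: the function name `parse_wsrep_nodes` is hypothetical, and it assumes a plain `gcomm://host,host,...` value, optionally followed by provider options after a `?`.

```shell
#!/bin/sh
# Hypothetical helper: print the hosts in a wsrep_cluster_address value,
# one per line. Illustration only, not ClusterControl's own parser.
parse_wsrep_nodes() {
    printf '%s\n' "$1" |
        sed -e 's|^gcomm://||' -e 's|?.*$||' |  # drop scheme and trailing ?options
        tr -d ' ' |                              # drop stray spaces
        tr ',' '\n' |                            # one host per line
        sed '/^$/d'                              # skip empty entries
}

# Prints 10.0.1.10, 10.0.1.11 and 10.0.1.12 on separate lines
parse_wsrep_nodes "gcomm://10.0.1.10,10.0.1.11,10.0.1.12"
```

If the list printed here does not match the nodes you expect, fix wsrep_cluster_address on that node before adding the cluster.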

Click "Add Cluster". After a while the cluster will appear; otherwise "Job failed" is shown on the screen and you can investigate what the problem is.

Comments

I have run into a problem with adding an existing cluster, below is the output from ClusterControl progress:

Installation Progress

5886 - Message sent to controller

5887 - Verifying job parameters.

5888 - Verifying controller host and cmon password.

5889 - Verifying the SSH connection to 10.2.181.161.

5890 - Verifying the MySQL user/password.

5891 - Getting node list from the MySQL server.

5892 - Found 3 nodes.

5893 - Checking the nodes that those aren't in other cluster.

5894 - Verifying the SSH connection to the nodes.

5895 - Check SELinux statuses

5896 - Granting the controller on the cluster.

5897 - Detecting the OS.

5898 - Determining the datadir on the MySQL nodes.

Can't determine the datadir, connect failed: Access denied for user 'cmon'@'10.2.180.99' (using password: YES)

When I check grants on the target node I get the following:

node1 mysql> show grants for cmon@'10.2.180.99';

+------------------------------------------------------------------------------------------------------------------------------------------+

| Grants for cmon@10.2.180.99 |

+------------------------------------------------------------------------------------------------------------------------------------------+

| GRANT ALL PRIVILEGES ON *.* TO 'cmon'@'10.2.180.99' IDENTIFIED BY PASSWORD '*E8C5459B50EF1C73187CBEFB6D0FAF5C0F4E0812' WITH GRANT OPTION |

+------------------------------------------------------------------------------------------------------------------------------------------+

and the user can definitely connect and get the datadir from the ClusterControl host:

[root@galeracc01 ~]# mysql -u cmon -p -h 10.2.181.161

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 35

Server version: 5.5.36-MariaDB-wsrep-log MariaDB Server, wsrep_25.9.r3961

Copyright (c) 2000, 2014, Oracle, Monty Program Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show global variables like 'data%';

+---------------+-----------------+

| Variable_name | Value           |

+---------------+-----------------+

| datadir       | /var/lib/mysql/ |

+---------------+-----------------+

1 row in set (0.01 sec)

I even tried removing the cmon user from the node in case there was a password issue, it was recreated by the Add Existing Cluster process when I ran it again.

Any help would be appreciated.

Hi,

Do you have a password containing "strange" characters, like $ ! & " ' | ?

Best regards

Johan

Sorry, I should have stated, the account is using the default cmon password.

I have the same problem... "Can't determine the datadir, connect failed: Host '172.16.120.43' is not allowed to connect to this MySQL server"...

Hi,

Can you run this from the controller (172.16.120.43):

mysql -ucmon -p -h <the mysql server in question> -P3306 -e "show global variables like 'datadir'"

What do you get then?

What version of cmon are you using?

cmon --version

or (depending on OS and where it was installed):

/usr/local/cmon/sbin/cmon --version

Thanks

johan

Hi, thanks for your hints, but I reset my installation and started over again.

Now it seems to be working... Thanks for your time...

I am trying to add an existing cluster. After configuring sshd_config and the SSH keys, I am still getting this error:

61 - Message sent to controller

62 - Verifying controller host and cmon password.

63 - Verifying the SSH access to the controller.

64 - Could not SSH to controller host (10.40.191.7): libssh auth error: Access denied. Authentication that can continue: publickey (root, key=/root/.ssh/id_rsa)

Job failed

Did I miss something? I know this is very basic, but I cannot move forward with my testing because of this error.

Hi,

On the controller, can you do as user root:

ssh -v -i /root/.ssh/id_rsa root@10.40.191.7

What do you get?

BR

johan

Hi Johan,

The issue is now resolved. Port 22 was closed on the destination. I also set proper file permissions on the key files.

On each node:

#chmod 700 ~/.ssh/authorized_keys

#chmod 600 ~/.ssh/id_rsa.pub

#chmod -R go-wr ~/.ssh

The new error I get, after the 3 nodes are detected when adding the existing cluster, is:

Can't SSH to 10.40.192.85?pc.wait_prim=no: hostname lookup failed: '10.40.192.85?pc.wait_prim=no' (in one node only)

Is it recommended to set this option to yes? Isn't it good practice to ensure the server will start running even if it can't determine a primary node, for example if all members go down at the same time?

Thanks,

Cindy

Hi Cindy,

Can you go to (in the web browser):

http://clustercontroladdress/clustercontrol/admin/#g:jobs

Click on the failed job (add existing). Can you send the output from that job?

Thanks

johan

Hi Cindy,

I believe we know the problem now.

The string pc.wait_prim=no is at the end of wsrep_cluster_address, and cmon fails to parse it.

We will fix this.

Thanks a lot.

BR

johan

Here's the output from the job failed.

179: Can't SSH to 10.40.192.85?pc.wait_prim=no: hostname lookup failed: '10.40.192.85?pc.wait_prim=no'

178: Verifying the SSH connection to the nodes.

177: Checking the nodes that those aren't in another cluster.

176: Found in total 3 nodes.

175: Found node: 10.40.192.85?pc.wait_prim=no

174: Found node: 10.40.193.46

173: Found node: 10.40.191.170

172: Output: wsrep_cluster_address gcomm:// 10.40.191.170,10.40.193.46,10.40.192.85?pc.wait_prim=no SSH command: /usr/bin/mysql -u'root' -p '**********' -N -B -e "show global variables like 'wsrep_cluster_address';"

For the resolution, how long do you think it will be?

Thanks a lot Johan!

Regards,

Cindy

Hi Cindy:

Please follow the instructions below (if you are on Ubuntu 12.04, your wwwroot is /var/www instead of /var/www/html/):

REDHAT / CENTOS

wget http://www.severalnines.com/downloads/cmon/clustercontrol-controller-1.2.9-616-x86_64.rpm

wget http://www.severalnines.com/downloads/cmon/clustercontrol-cmonapi-1.2.9-26-x86_64.rpm

wget http://www.severalnines.com/downloads/cmon/clustercontrol-1.2.9-90-x86_64.rpm

sudo yum localinstall clustercontrol-controller-1.2.9-616-x86_64.rpm clustercontrol-1.2.9-90-x86_64.rpm clustercontrol-cmonapi-1.2.9-26-x86_64.rpm

UBUNTU / DEBIAN

wget http://www.severalnines.com/downloads/cmon/clustercontrol_1.2.9-91_x86_64.deb

wget http://www.severalnines.com/downloads/cmon/clustercontrol-controller-1.2.9-616-x86_64.deb

wget http://www.severalnines.com/downloads/cmon/clustercontrol-cmonapi_1.2.9-26_x86_64.deb

sudo dpkg -i clustercontrol*.deb

UPGRADE THE SCHEMA

mysql -ucmon -p -h127.0.0.1 cmon < /usr/share/cmon/cmon_db.sql

mysql -ucmon -p -h127.0.0.1 cmon < /usr/share/cmon/cmon_db_mods-1.2.8-1.2.9.sql

mysql -ucmon -p -h127.0.0.1 cmon < /usr/share/cmon/cmon_data.sql

mysql -ucmon -p -h127.0.0.1 cmon < /var/www/html/clustercontrol/sql/dc-schema.sql

RESTART CMON

sudo service cmon restart

Hi Johan,

It works!!! Thank you so much Johan! :)

Hi

Please help. I have searched Google but found nothing about a failure at "181 - Detecting the OS. Job failed."

162 - Message sent to controller

163 - Verifying controller host and cmon password.

164 - Verifying the SSH access to the controller.

165 - Verifying job parameters.

166 - Verifying the SSH connection to 10.36.0.90.

167 - Verifying the MySQL user/password.

168 - Getting node list from the MySQL server.

169 - Found node: '10.36.0.90'

170 - Found node: '10.36.0.92'

171 - Found node: '10.36.0.88'

172 - Found in total 3 nodes.

173 - Checking the nodes that those aren't in another cluster.

174 - Verifying the SSH connection to the nodes.

175 - Check SELinux statuses

176 - Detected that skip_name_resolve is not used on the target server(s).

177 - Granting the controller on the cluster.

178 - Node is Synced : 10.36.0.90

179 - Node is Synced : 10.36.0.92

180 - Node is Synced : 10.36.0.88

181 - Detecting the OS.

Job failed.

os ubuntu 14.04

Sorry, it was my mistake. It was an error like "Can't determine the datadir, connect failed: Access denied for user 'cmon'@'10.2.180.99' (using password: YES)".

I use OpenVPN on the controller, and I had not added the IP of the controller.

Hi, okay, did it proceed then to the end?

But we have a bug in the UI that it does not list ALL messages when it spits out "Job failed".

Best regards

Johan

Yes, I found the message 'Can't determine the datadir, connect failed: Access denied for user 'cmon'@'' only under Admin -> Cluster Jobs.

Hi Mick,

What did you do to fix this? I just want to understand if we did something wrong, or if we can improve something to make it easier.

BR

johan

Hi johan

I just added a GRANT for the user 'cmon'@'IP_OPENVPN_SERVER'.

Hi Johan,

We want to disable SSL3. Could you tell me which conf file cmon uses for SSL? I can’t find any SSLProtocol configuration on the cmon server.

#vi /etc/httpd/conf.d/

Thanks,

Cindy

Hi Cindy,

It should be in /etc/httpd/conf.d/ssl.conf

Can you check there? If this is not enough information, let me know and I will ask my colleagues for more advice on this.

BR

johan

Hi Johan,

Thank you! Another concern: I deleted the first cluster group I had added in the controller, but when re-adding it I keep receiving this output in the installation progress (Add Existing Cluster).

Installation Progress:

6101 - Message sent to controller

6102 - Verifying controller host and cmon password.

6103 - Verifying the SSH access to the controller.

6104 - Verifying job parameters.

6105 - Verifying the SSH connection to sgtl1db3.euwest.

6106 - Verifying the MySQL user/password.

6107 - Getting node list from the MySQL server.

6108 - Found node: '10.40.192.85'

6109 - Found node: '10.40.193.46'

6110 - Found node: '10.40.191.170'

6111 - Found in total 3 nodes.

6112 - Checking the nodes that those aren't in another cluster.

6113 - Host (10.40.192.85) is already in an other cluster.

I only have these addresses in my config file.

wsrep_cluster_address="gcomm://10.40.191.170,10.40.193.46,10.40.192.85?pc.wait_prim=no"

Regards,

Cindy

Hi Cindy,

when you deleted the first cluster, did you completely remove the cluster by ticking the box "completely remove the cluster from the controller"?

If you do on the controller:

mysql -ucmon -p -h127.0.0.1

use cmon;

select * from hosts;

select * from server_node;

select * from mysql_server;

Then do you see 10.40.192.85 or any other of the "old" servers there?

BR

johan

Hi Cindy,

also what version do you use (on the controller):

cmon --version

Best regards

johan

Hi Johan,

Thank you for the fast reply. I'm not sure whether that check box was shown during the deletion. I just went to the Action drop-down list, clicked Delete Cluster, and then confirmed with Yes.

Do I need to truncate the tables to refresh the data? The cmon_version is 1.2.9.632.

Regards,

Cindy

Can I manually delete the records of the 3 DB servers from these 3 tables in the cmon database? Or could you provide a script? Thanks!

Hi Cindy,

yes you can delete them. Unfortunately we don't have a script to auto-clean it up.

BR

johan

Hi Johan,

I have truncated the 3 tables, but after re-adding the cluster I can't see any data. Charts are not initialized and the nodes keep on loading. The cluster was added successfully, but it seems it is not monitoring any cluster nodes. The application is also very slow. Can you help me with this? Thanks!

Regards,

Cindy
